During the last quarter of 2021 you might be forgiven for not concentrating fully on the Return on Investment (ROI) from any training and development you organised. Indeed, some Human Resources teams (quite rightly) see Learning & Development (L&D) as an integral part of the people strategy and, as such, probably do not track the results of L&D as closely as they could. That’s not to say that they should, just that they could track the outcomes more closely.

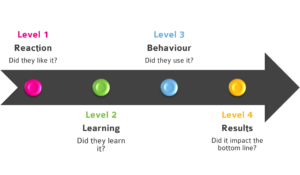

In 2017, I attended a programme in Stockholm that was organised by our good friends at PROMOTE® International. It examined ways in which we can evaluate our L&D activities. The underlying framework was Kirkpatrick Partners’ 4 Levels of Training Evaluation.

I’ll be honest here and say that it did feel like it was just going to be a relatively expensive way of confirming what I already knew about the framework. It turned out to be rather revelatory and here’s why.

Misunderstanding around the ‘Levels’

I was very pleased to see that the lead facilitator for the session was Jim Kirkpatrick, son of Donald Kirkpatrick – the creator of the framework. Jim shared with the group that his father had never intended the four stages to be ‘levels’; his original thinking centred on the question ‘How are the 4 elements related?’ It is my understanding that, at least in part, the relationship between the 4 elements led to them being called ‘levels’. Further, the gold standard of evaluation was to have meaningful measures for all four, with each having an effect on the one above. Let me explain.

The importance of the links

We studied the probable links between each of the levels before Jim shared with us that the ‘links’ were not as straightforward as we thought. Indeed, Level 1 (reaction to learning) is strongly linked to people’s learning (Level 2). No surprise there! The more people enjoy the learning, the more they should be able to recount what they’ve learnt – at least in the short term! There is also a strong link between a change of behaviour adopted as a result of the learning (Level 3) and the subsequent results that the individual or organisation experiences (Level 4). Again, this should come as no surprise to any seasoned L&D professional. What may come as a mild surprise is that there is often a common flaw in the way L&D conducts its evaluation of learning events and interventions, and it is caused by the weakest link (and not the TV quiz show). The weak link is between Level 2 and Level 3. That is, putting your learning into practice!

What gets in the way?

There are many reasons for not applying your learning and making behavioural changes: lack of opportunity, lack of managerial support, peer/team feedback, lack of immediate results – the list goes on. The fact remains that if you are to overcome these blockers, you need to think differently about what you measure. You should start on the top line: money, employee satisfaction, client satisfaction, quality, sustainability etc. – the Key Result Areas (KRAs) for most businesses, at least the successful ones.

What we do

You should start with the end in mind by asking “If the learning intervention is successful, in which key result areas do you expect to see an uplift?” You’ll notice that this is very much a Level 4 question. You can even set a benchmark (‘Where are you now?’), an uplift target (‘Where do you want to be?’) and, if you are to follow the ‘T’ of SMART, a deadline (‘By when?’). And yes, I know you’ve probably heard all that before.
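If it helps to see the benchmark, target and deadline side by side, here is a minimal sketch in Python. It is only an illustration: the `UpliftTarget` name, its fields and the employee-satisfaction figures are all made up for the example, not part of the Kirkpatrick framework or any real dataset.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class UpliftTarget:
    """One Level 4 measure for a key result area (hypothetical structure)."""
    key_result_area: str
    benchmark: float   # 'Where are you now?'
    target: float      # 'Where do you want to be?'
    deadline: date     # 'By when?'

    def progress(self, current: float) -> float:
        """Fraction of the planned uplift achieved so far.

        Works for measures you want to raise or to lower; the value can be
        negative (moving the wrong way) or above 1.0 (target exceeded).
        """
        planned_uplift = self.target - self.benchmark
        if planned_uplift == 0:
            raise ValueError("target must differ from benchmark")
        return (current - self.benchmark) / planned_uplift

    def is_met(self, current: float, on: date) -> bool:
        """True if the full uplift was reached on or before the deadline."""
        return on <= self.deadline and self.progress(current) >= 1.0


# Made-up figures: employee satisfaction score moving from 60 towards 70.
satisfaction = UpliftTarget("employee satisfaction", 60.0, 70.0, date(2022, 12, 31))
halfway = satisfaction.progress(65.0)   # halfway between benchmark and target
```

A score of 65 against a benchmark of 60 and a target of 70 is half of the planned uplift. Nothing here says the training caused the movement; it simply keeps the three SMART questions in one place.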

“So where is the revelation?” I hear you cry.

It’s not in the fact that you should start with the end in mind, but in what you can physically measure in a relatively short timeframe. Most organisations want to see results (Level 4) immediately. Within my areas of expertise (supervision, leadership, management and influencing), expecting results to come through that quickly is ambitious. Indeed, in most cases there is a lag between the training and seeing the effects of it. Things like ‘bottom line profit through more effective leadership’ or ‘increased employee retention’ do take time to manifest themselves.

What you can measure are any immediate behavioural changes and (crucially) whether they ‘stick’ over time. You can then use the strong link between Levels 3 and 4 to ‘guarantee’ that, so long as there are no other significant influences, you have achieved what you set out to do, i.e. strategically support the business with targeted learning plans and proven behavioural change in the target population. You should then look at the leadership of the target population to see in what ways you can support them in making the behavioural changes ‘stick’. More often than not, we find that this is by far the most challenging part!

We much prefer the modular approach to single-event learning. We find that the modular approach significantly reinforces the previous learning before moving on to the new stuff. And there’s no harm in asking your participants to present what they’ve learnt, and how they have used it, to the rest of the cohort! It focusses the mind on the application part.

‘But what about the other levels?’

In designing the programmes, you should ‘take care’ of Levels 1 and 2 with things like online knowledge checks (Level 2) and in-programme evaluations (Levels 1 and 2). In doing so, you can adjust your approach to maximise what the participants are learning. That way, you can’t guarantee results, but you can prove that people are doing things differently as a direct result of the programmes you put your employees through.

So why the title of the blog?

With many organisations beginning to plan their L&D strategies for the coming financial year (or perhaps you’ve already got your plan in place if you use a calendar year) you can make sure your plan is strategically aligned by asking yourself one simple question – “In order to fulfil our part of the strategy, what do we need our people to get better at?”